Computation, Language, and Meaning Band of Researchers

What do all of the languages of the world have in common? Why and how did these linguistic universals arise? What do they tell us about human cognition and how can we harness them to build better language technologies?

At CLMBR, we address these and related questions from a computational and experimental perspective. We are a lab hosted at the Linguistics Department of the University of Washington, as part of the larger Treehouse computational linguistics lab.

News

On sabbatical in Fall 2025 and Spring 2026. Taking on fewer students, review requests, and the like this year.

Updated papers from 2025 and January 2026. The rest of the website (e.g. recent alumni / theses) will follow before too long :). [January 2026]

Upcoming invited talks:

- Northeastern University Philosophy and Technology Colloquium (September 2025)

- Georgetown University Linguistics Colloquium (October 2025)

Filtered Corpus Training paper accepted at TACL; congratulations to Abhinav and the whole team! Some other new papers also updated below. [September 2024]

Congratulations to Dr. C.M. Downey, who will be starting as an Assistant Professor of Linguistics and Data Science at the University of Rochester this Fall! [February / June 2024]

Upcoming invited talks:

- 9th CSLI Workshop on Logic, Rationality and Intelligent Interaction (June 2024)

- North East Linguistics Society (NELS55; October 2024)

- Workshop on AI and cognitive science (Göteborg; November 2024)

- Workshop on self-assembling games (Irvine; January 2025)

Tenured! 🤗 Very grateful for all the wonderful mentors and students who have helped along the way. [April 2024]

Congratulations to the brilliant Dr. Naomi Tachikawa Shapiro for successfully defending her dissertation (the first in the group)! Naomi is now off to a postdoc in computational psycholinguistics at Nijmegen! [Dec 2023]

Five papers accepted at various EMNLP Workshops; congratulations to everyone involved! [Oct 2023]

- Evaluating Transformer's Ability to Learn Mildly Context-Sensitive Languages at BlackboxNLP

- Embedding structure matters: Comparing methods to adapt multilingual vocabularies to new languages at Multilingual Representation Learning (MRL): this also won the best paper award!

- Learning to translate by learning to communicate at Multilingual Representation Learning (MRL)

- Two papers (to appear soon) contributing to the Collaborative Benchmarking Task at the GenBench workshop on generalisation in NLP

Upcoming invited talks:

- Workshop on internal and external pressures shaping language @ ESSLLI 2023

- Ohio State Linguistics Colloquium (Sept 2023)

- Workshop on computational approaches to language typology and evolution (Oct 2023)

- UMass Amherst Linguistics Colloquium (Dec 2023)

- University of Edinburgh Center for Language Evolution (Winter 2024)

Congratulations to everyone who graduated in Spring 2023! CLMSer Yifan Jiang is off to a PhD in CS at Waterloo! Our first batch of undergrads has also graduated: Minghe Zhang is off to an MS in Stats at Stanford, and we are lucky to have Leroy Wang and Gladys Wang staying on board for the CLMS program. Congratulations everyone!

Several new papers and preprints posted [February 2023]

Upcoming invited talks:

- McDonnell Foundation workshop on monotonicity @ Brown (Dec 2022)

- UC Irvine Language Science Colloquium (Feb 2023)

- MIT Breakstone Speaker Series on Language, Mind and Computation (March 2023)

- UC Davis Linguistics Colloquium (May 2023)

Two papers accepted at BlackboxNLP! [October 2022]

Emergent Communication Fine-tuning (EC-FT) for Pretrained Language Models gets runner-up best paper at the Emergent Communication Workshop @ ICLR! [April 2022]

Invited talks at Ettinger lab @ U Chicago and CLASP @ Gothenburg [March 2022]

Two ACL papers and a SALT paper accepted! [February 2022]

Added several publications and preprints from the second half of 2021. [January 2022]

Two new papers! [May 2021]

- "Language models use monotonicity to assess NPI licensing" at Findings of ACL, with Jaap Jumelet, Milica Denic, Jakub Szymanik, Dieuwke Hupkes

- "Quantifiers satisfying semantic universals are simpler" at CogSci 2021, with Iris van de Pol, Paul Lodder, Leendert van Maanen, Jakub Szymanik

Three new preprints posted! See the Publications section below for more info and links. (April 2021)

"Referential and General Calls in Primate Semantics" published in Linguistics and Philosophy

Invited talks at TedLab@MIT (May 2021) and Montana State Computer Science Colloquium (Feb 2021)

Two papers and an extended abstract accepted at BlackboxNLP! (September 2020)

- "Probing for Multilingual Numerical Understanding in Transformer-Based Language Models" by Devin Johnson, Denise Mak, Drew Barker, and Lexi Loessberg-Zahl

- "Linguistically-Informed Transformations (LIT): A Method for Automatically Generating Contrast Sets" by Chuanrong Li, Lin Shengshuo, Zeyu Liu, Xinyi Wu, Xuhui Zhou, and Shane Steinert-Threlkeld

- "Mighty Morpho-Probing Models" (extended abstract) by Naomi Tachikawa Shapiro, Amandalynne Paullada, and Shane Steinert-Threlkeld

Invited talks at Human Interactivity and Language Lab (June 2020) and the Computation and Language Lab (October 2020)

Shane is co-organizing a workshop on Computational and Experimental Explanations in Semantics and Pragmatics, co-located with ESSLLI (postponed from August 2020 to 2021)

Daniel is co-organizing a track on deep learning in search at NIST's TREC 2020 (Nov 2020)

"On the Spontaneous Emergence of Discrete and Compositional Signals" (with Nur Lan and Emmanuel Chemla) accepted at ACL; preprint available soon (April 2020)

"Complexity/informativeness trade-off in the domain of indefinite pronouns" presented at Semantics and Linguistic Theory (SALT 30) (rescheduled from April to August 2020)

"Semantic Expressivism for Epistemic Modals" appears online at Linguistics and Philosophy (March 2020)

Invited talk at Language Change: Theoretical and Experimental Perspectives at the Hebrew University of Jerusalem (rescheduled from March 2020 to January 2021)

"Most, but not more than half is proportion-dependent and sensitive to individual differences" appears in the proceedings of Sinn und Bedeutung (SuB 24) (February 2020)

Daniel is teaching Deep Learning in Search at ACM SIGIR/SIGKDD Africa Summer School on Machine Learning for Data Mining and Search (January 2020)

"Quantifiers in natural language optimize the simplicity/informativeness trade-off" presented at the 22nd Amsterdam Colloquium (December 2019)

"An Explanation of the Veridical Uniformity Universal" appears online at Journal of Semantics (open access) (December 2019)

"Ease of Learning Explains Semantic Universals" appears online at Cognition (November 2019)

"Learnability and Semantic Universals" appears online at Semantics & Pragmatics (November 2019)

"Towards the Emergence of Non-trivial Compositionality" accepted at Philosophy of Science (September 2019)

Shane presents two papers, "The evolution of monotone quantifiers via iterated learning" and "Complexity and learnability in the explanation of semantic universals", at CogSci (July 2019)

Lewis presents "Neural Models of the Psychosemantics of 'Most'" at Cognitive Modeling and Computational Linguistics (CMCL) (June 2019)

Research

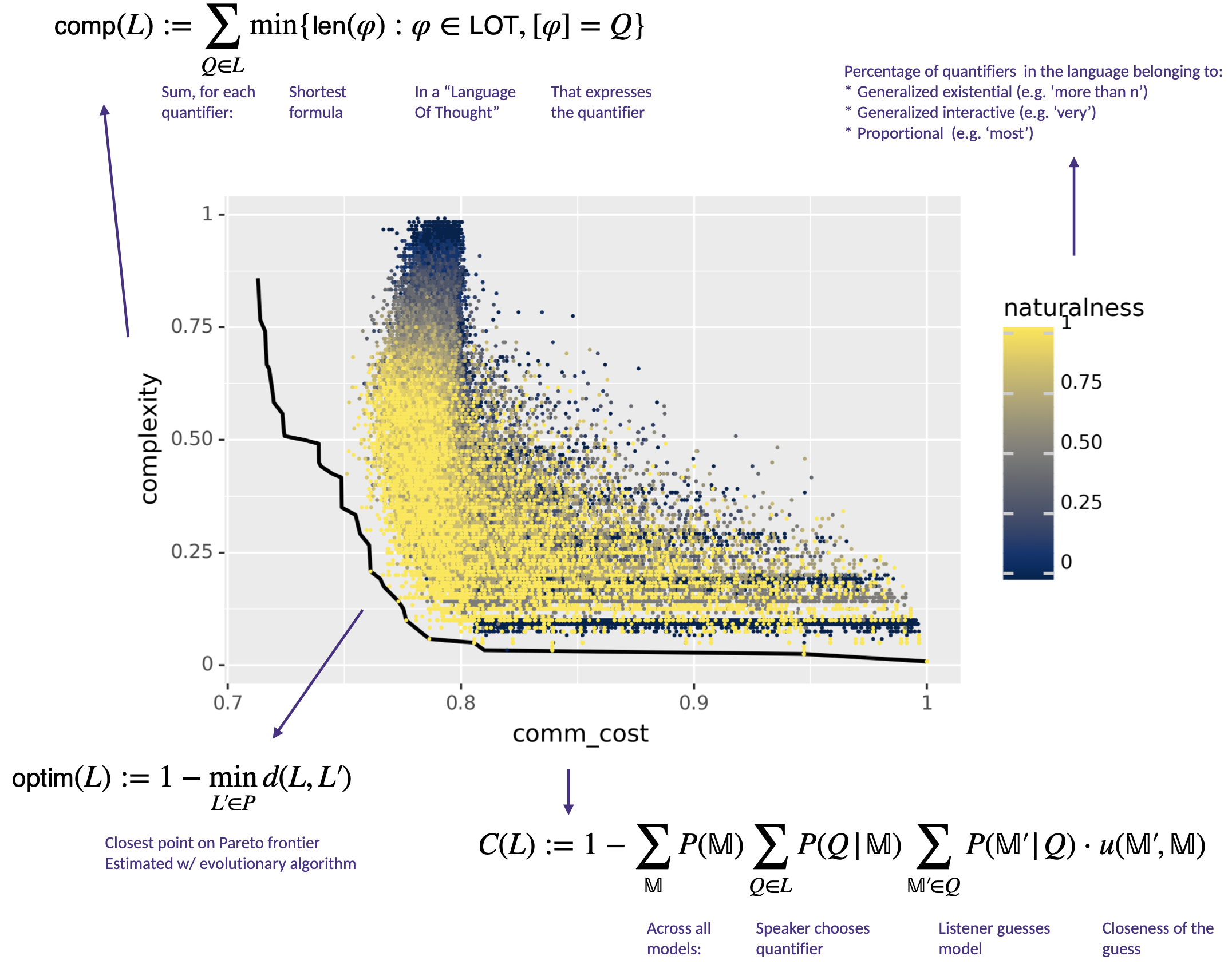

Semantic Universals

A major line of recent work has involved explaining semantic universals: shared properties of meaning across all natural languages. We have been using machine learning to argue that the languages of the world express meanings that are easier to learn. Ongoing work extends this approach to more linguistic domains, compares it to other candidate explanations, and integrates learnability with models of language change/evolution.

Representative papers:

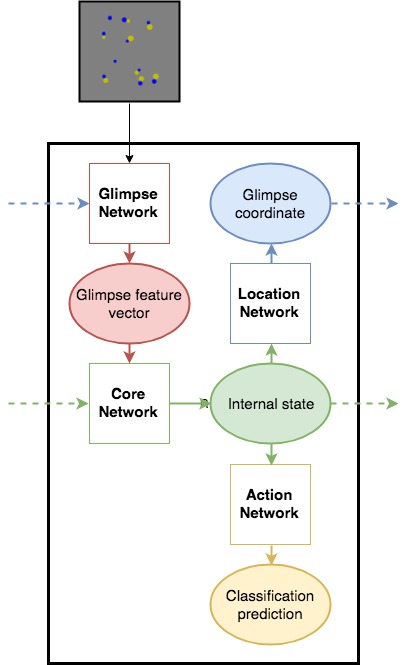

Emergent Communication

By placing artificial agents in simulated environments, we can use reinforcement learning to study the emergence of fundamental features of human language. A particular focus has been on non-trivial compositionality: rudimentary forms of hierarchical structure in which one linguistic item modifies another, as with non-intersective adjectives. This research line has both theoretical and practical interest, since autonomous agents such as self-driving vehicles may benefit from learning their own communication system.
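As a toy illustration of the kind of setup this line of work starts from, here is a minimal sketch of a two-agent Lewis signaling game in which a sender and a receiver learn purely from shared reward via Roth-Erev (urn-style) reinforcement. This is not the lab's code: the number of states and signals, the reward of 1 on success, and the helper names `train_signaling_game` and `expected_success` are hypothetical choices made for illustration. It only shows how a shared code can emerge from reward alone; it does not model compositionality.

```python
import random

def train_signaling_game(n_states=3, n_signals=3, rounds=20000, seed=0):
    """Hypothetical helper: Roth-Erev learning in a Lewis signaling game."""
    rng = random.Random(seed)
    # Each row is an "urn" of propensities: sender[state][signal], receiver[signal][act].
    sender = [[1.0] * n_signals for _ in range(n_states)]
    receiver = [[1.0] * n_states for _ in range(n_signals)]

    for _ in range(rounds):
        state = rng.randrange(n_states)  # nature picks a state uniformly
        # Each agent chooses with probability proportional to its propensities.
        signal = rng.choices(range(n_signals), weights=sender[state])[0]
        act = rng.choices(range(n_states), weights=receiver[signal])[0]
        if act == state:
            # Success: both agents reinforce the choices they just made.
            sender[state][signal] += 1.0
            receiver[signal][act] += 1.0
    return sender, receiver

def expected_success(sender, receiver):
    """Probability of communicative success under the current mixed strategies,
    assuming states are uniformly distributed."""
    n_states = len(sender)
    total = 0.0
    for state in range(n_states):
        s_total = sum(sender[state])
        for signal, weight in enumerate(sender[state]):
            r_total = sum(receiver[signal])
            total += (weight / s_total) * (receiver[signal][state] / r_total)
    return total / n_states

sender, receiver = train_signaling_game()
print(f"expected success: {expected_success(sender, receiver):.3f}")
```

With uniform initial propensities, success starts at chance (1/3 for three states); reinforcement typically pushes it well above that, though Roth-Erev learners can also settle into partially pooling codes where two states share a signal.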

Representative papers:

Cognitive Science and NLP

We also conduct studies investigating the cognitive underpinnings of language understanding. How do speakers represent meanings internally and how do these representations interface with other cognitive modules?

Moreover, as natural language processing tools become increasingly powerful, we believe that two-way interaction between cognitive science and NLP will only grow in importance. On the one hand, insights from human language understanding can help us analyze, understand, and build better machines. On the other hand, increasingly sophisticated models from NLP can provide inspiration and insights for modeling human behavior. Our lab has ongoing projects in both directions.

Representative papers:

People

Principal Investigator

Shane Steinert-Threlkeld

Associate Professor, Linguistics

Personal Website

shanest AT uw DOT edu

Shane is an Associate Professor in Linguistics, where he directs the CLMBR group and teaches in the CLMS program. When not researching or teaching, he spends as much time as possible climbing rocks.

Graduate Students

Danielle Celone

Master's Student in Computational Linguistics

dcelon AT uw DOT edu

Danielle’s research applies techniques from computational linguistics to non-human animal communication systems. She's interested in formal language theory, bioacoustics, syntax, and semantics. She likes movies, birdwatching, science fiction, and road trips.

Iskar Deng

Master's Student in Computational Linguistics

hd49 AT uw DOT edu

Iskar is interested in cognitive differences between humans and language models, especially in typological patterns. In his free time, he enjoys playing baseball and following the Seattle Mariners.

Chris Haberland

PhD Student in Computational Linguistics

Personal Website

haberc_ATT uw_DOTT [educ. abbrev.]

Chris studies multimodal language models and natural languages through controlled computational experiments. He is interested in advancing reinforcement learning techniques to emulate language evolution, acquisition, and processing. Chris is also working to build NLP resources for Italic and Mexican languages.

Jiamu Luo

Master's Student in Computational Linguistics

jiamuluo AT uw DOT edu

Jiamu's overarching research question is how language models correspond to human language systems. He is also interested in developing more human-comparable models for that purpose. In his free time, he can be found brewing coffee, playing badminton, or watching musicals.

Cassie Maz

PhD Student in Computational Linguistics

Personal Website

cassam5 AT uw DOT edu

Cassie's main research interest is the demand for and development of sign language translation tools. Outside of research, she enjoys watching TV and the Sisyphean task of finishing books on her ever-growing reading list.

Amanda Popadich

PhD Student in Computational Linguistics

popadich AT uw DOT edu

Amanda's work explores how humans and language models process ambiguous language and make inferences. She uses methods from experimental pragmatics and machine learning. Outside of research, she can usually be found skiing or hiking.

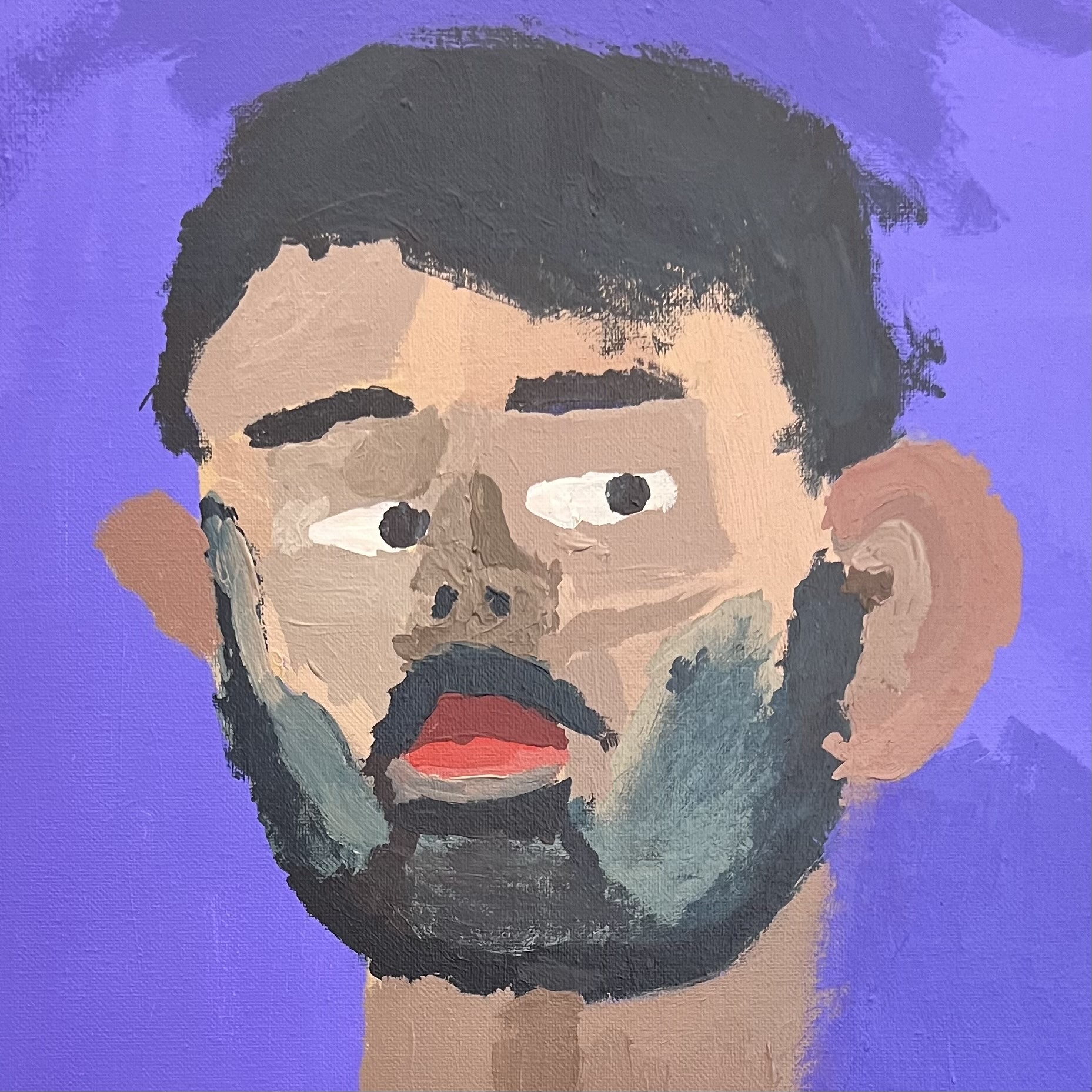

Jingnong Qu

PhD Student in Computational Linguistics

Personal Website

jingnong AT uw DOT edu

Jingnong is interested in applying computational methods to studying natural language semantics. He enjoys visual arts, music, driving, and comedy outside of his research. His image is his first attempt at a self-portrait.

Undergraduate Students

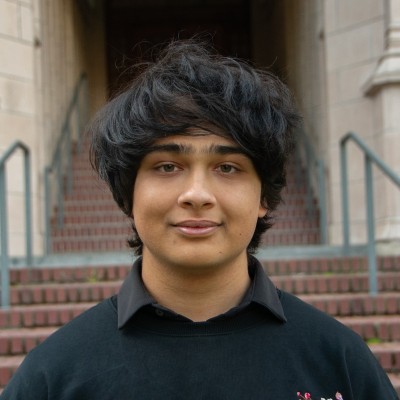

Ashvin Ranjan

Undergraduate Student in Computer Science, Minor in Linguistics & Japanese

ar31 AT uw DOT edu

Ashvin's work focuses on a variety of topics in computational linguistics, from information theory to machine learning architecture and large-scale text processing. Aside from research, he enjoys photography, listening to music, and video games.

Alumni

PhD

- Naomi Tachikawa Shapiro (first job: Postdoc in Computational Psycholinguistics @ Nijmegen)

- C.M. Downey (first job: Assistant Professor of Linguistics and Data Science @ University of Rochester)

M.S. Thesis Students

- Daniel Campos (PhD student in CS @ UIUC)

  CLMS Thesis: Explorations In Curriculum Learning Methods For Training Language Models

- Paige Finkelstein (Software Engineer @ turn.io)

  CLMS Thesis: Human-assisted Neural Machine Translation: Harnessing Human Feedback for Machine Translation

- Benny Longwill (Research Engineer @ ETS)

  CLMS Thesis: The Suitability of Generative Adversarial Training for BERT Natural Language Generation

- Chih-chan Tien (PhD student in CS @ U Chicago)

  CLMS Thesis: Bilingual alignment transfers to multilingual alignment for unsupervised parallel text mining

- Devin Johnson (PhD student in Linguistics @ Northwestern)

  CLMS Thesis: Semantic Universals in Bayesian Learning of Quantifiers

- Wes Rose (Language Engineer @ Amazon)

  CLMS Thesis: Toward the Emergence of Quantifiers

- Shunjie Wang (Data Scientist, AI/NLP @ Happify Health)

  CLMS Thesis: Evaluating Transformer's Ability to Learn Mildly Context-Sensitive Languages

- Jessica Sweeney (Data Scientist @ Coastal Community Bank)

  CLMS Thesis: Comparing Methods for Automatic Identification of Mislabeled Data

- Megan Barnes (Software Engineer @ Google)

  MS Thesis: Latent Compositional Representations for English Function Word Comprehension

- Katya Simpson (Senior Data Scientist @ Twitter)

  CLMS Thesis: "Obama never said that": Evaluating fact-checks for topical consistency and quality

- Nathaniel Imel (PhD student, Logic and Philosophy of Science @ UC Irvine)

  CLMS Thesis: Modals in Natural Language Optimize the Simplicity/Informativeness Trade-Off

- Meheresh Yeditha (Software Engineer @ Rippling)

  CLMS Thesis: An Investigation Into Supervision for Seq2Seq Techniques for Natural Language to Code Translation

- Yifan Jiang (PhD student, Computer Science @ Waterloo)

  CLMS Thesis: The Weighted Möbius Score: A Unified Framework for Feature Attribution

- Abhinav Patil (PhD student, Cognitive Science @ The Johns Hopkins University)

  CLMS Thesis: Language Models can Generalize from Indirect Evidence: Evidence from Filtered Corpus Training (FICT)

- Lindsay Paige Skinner

  CLMS Thesis: Convexity is a Fundamental Feature of Efficient Semantic Compression in Probability Spaces

- Amélie Thu Tâm Reymond

  CLMS Thesis: mSCAN - a Multilingual Dataset for Compositional Generalization Evaluation

- Leroy Wang

  CLMS Thesis: Complexity of In-Context Concept Learning in Language Models

- Yao-Fei Cheng (AI Resident @ Apple)

  CLMS Thesis: Beyond Memorization: Evaluating Length-Generalization in Transformer-based Language Models

- Dwija Parikh (Linguistic Engineer @ Meta)

  CLMS Thesis: Bridging the Gap: Adaptation Approaches for Under-Resourced Language Families

Publications

Preprints

Differences in Typological Alignment in Language Models' Treatment of Differential Argument Marking

Iskar Deng, Nathalia Xu, Shane Steinert-Threlkeld

preprint

When Efficient Communication Explains Convexity

Ashvin Ranjan, Shane Steinert-Threlkeld

preprint

Deontic priority in the lexicalization of impossibility modals

Wataru Uegaki, Anne Mucha, Nathaniel Imel, Shane Steinert-Threlkeld

preprint

2026

An Efficient Communication Analysis of Modal Typology

Nathaniel Imel, Qingxia Guo, Shane Steinert-Threlkeld

Open Mind

official

preprint

Anti-Babel: Three Degrees of Interspecies Comprehension

Philippe Schlenker, Camille Coye, Ambre Salis, Shane Steinert-Threlkeld, Lucie Ravaux, Emmanuel Chemla

Mind & Language

official

preprint

2025

Ancestral Meanings: A Prelude to Evolutionary Animal Linguistics

Philippe Schlenker, Christina Pawlowitsch, Luc H. Arnal, Keny Chatain, Lucie Ravaux, Robin Ryder, Ambre Salis, Shane Steinert-Threlkeld, Léo Wang, Emmanuel Chemla

Linguistics and Philosophy, 48(5): 823–878

official

preprint

Second-Order Zipf's Law for Word Co-Occurrences

Nicolas Guerin, Shane Steinert-Threlkeld, Robin J. Ryder, Emmanuel Chemla

Open Mind, 9: 1138–1157

official

The Unnatural Language ToolKit (ULTK)

Nathaniel Imel, Christopher Haberland, Shane Steinert-Threlkeld

Society for Computation in Linguistics (SCiL), vol. 8, no. 1

official

Minimization of Boolean Complexity in In-Context Concept Learning

Leroy Z. Wang, R. Thomas McCoy, Shane Steinert-Threlkeld

CogInterp: Interpreting Cognition in Deep Learning Models

official

Quantifiers That Are More Monotone Are Easier to Learn

Christopher Haberland, Shane Steinert-Threlkeld

Semantics and Linguistic Theory (SALT 35)

2024

Filtered Corpus Training (FiCT) Shows that Language Models can Generalize from Indirect Evidence

Abhinav Patil, Jaap Jumelet, Yu Ying Chiu, Andy Lapastora, Peter Shen, Lexie Wang, Clevis Willrich, Shane Steinert-Threlkeld

Transactions of the Association for Computational Linguistics (TACL)

official

preprint

Targeted Multilingual Adaptation for Low-resource Language Families

C.M. Downey, Terra Blevins, Dhwani Serai, Dwija Parikh, Shane Steinert-Threlkeld

Findings of EMNLP

official

preprint

The Impact of Syntactic and Semantic Proximity on Machine Translation with Back-Translation

Nicolas Guerin, Emmanuel Chemla, Shane Steinert-Threlkeld

Transactions on Machine Learning Research (TMLR)

official

preprint

Iconic Artificial Language Learning in the Field: An Experiment with San Martín Peras Mixtec Speakers

Naomi Tachikawa Shapiro, Andrew Hedding, Shane Steinert-Threlkeld

CogSci

official

Minimal Compositionality versus Bird Implicatures: Two Theories of ABC-D Sequences in Japanese Tits

Philippe Schlenker, Ambre Salis, Maël Leroux, Camille Coye, Luigi Rizzi, Shane Steinert-Threlkeld, Emmanuel Chemla

Biological Reviews

official

preprint

Limitations of a modal analysis of before and after

Toshiyuki Ogihara, Shane Steinert-Threlkeld

Semantics & Pragmatics

official

2023

Learning to translate by learning to communicate

C.M. Downey, Leo Z. Liu, Xuhui Zhou, Shane Steinert-Threlkeld

Multilingual Representation Learning (MRL 2023)

official

preprint

code

Embedding structure matters: Comparing methods to adapt multilingual vocabularies to new languages

C.M. Downey, Terra Blevins, Nora Goldfine, Shane Steinert-Threlkeld

Multilingual Representation Learning (MRL 2023), best paper award

official

preprint

Evaluating Transformer's Ability to Learn Mildly Context-Sensitive Languages

Shunjie Wang, Shane Steinert-Threlkeld

BlackboxNLP

official

preprint

mSCAN: A Dataset for Multilingual Compositional Generalisation Evaluation

Amelie Raymond, Shane Steinert-Threlkeld

GenBench Workshop

official

GQG: Generalized Quantifier Generalization - A Dataset for Evaluating Quantifier Semantics Understanding in Language Models

Leroy Zhifei Wang, Shane Steinert-Threlkeld

GenBench Workshop

official

Iconic Artificial Language Learning: A Conceptual Replication with English Speakers

Naomi Tachikawa Shapiro, Shane Steinert-Threlkeld

CogSci

official

preprint

A Semantic Universal for Modality

Shane Steinert-Threlkeld, Nathaniel Imel, Qingxia Guo

Semantics & Pragmatics

official

preprint

Quantifiers Satisfying Semantic Universals Have Shorter Minimal Description Length

Iris van de Pol, Paul Lodder, Leendert van Maanen, Shane Steinert-Threlkeld, Jakub Szymanik

Cognition

official

code

Uncovering the structure of semantic representations using a computational model of decision-making

Sonia Ramotowska, Shane Steinert-Threlkeld, Leendert Van Maanen, Jakub Szymanik

Cognitive Science

official

code + data

2022

Probing for Understanding of English Verb Classes and Alternations in Large Pre-trained Language Models

David K. Yi, James V. Bruno, Jiayu Han, Peter Zukerman, Shane Steinert-Threlkeld

BlackboxNLP

official preprint

code

Testing Pre-trained Language Models' Understanding of Distributivity via Causal Mediation Analysis

Pangbo Ban, Yifan Jiang, Tianran Liu, Shane Steinert-Threlkeld

BlackboxNLP

official preprint

code

Beyond Anthropocentrism in Comparative Cognition: Recentering Animal Linguistics

Philippe Schlenker, Camille Coye, Shane Steinert-Threlkeld, Nathan Klinedinst, Emmanuel Chemla

Cognitive Science

official preprint

Modal semantic universals optimize the simplicity/informativeness trade-off

Nathaniel Imel, Shane Steinert-Threlkeld

SALT

official

preprint

code

A Database for Modal Semantic Typology

Qingxia Guo, Nathaniel Imel, Shane Steinert-Threlkeld

SIGTYP

official website

A Masked Segmental Language Model for Unsupervised Natural Language Segmentation

C.M. Downey, Fei Xia, Gina-Anne Levow, Shane Steinert-Threlkeld

SIGMORPHON

official preprint

code

Indefinite pronouns optimize the simplicity/informativeness trade-off

Milica Denić, Shane Steinert-Threlkeld, Jakub Szymanik

Cognitive Science

official preprint

code

Emergent Communication Fine-tuning (EC-FT) for Pretrained Language Models

Shane Steinert-Threlkeld, Xuhui Zhou, Zeyu Liu, C.M. Downey

Emergent Communication Workshop (EmeCom 5) @ ICLR

Runner-up best paper award

official

code

Bilingual alignment transfers to multilingual alignment for unsupervised parallel text mining

Chih-chan Tien, Shane Steinert-Threlkeld

ACL

official preprint

code

Multilingual unsupervised sequence segmentation transfers to extremely low-resource languages

C.M. Downey, Shannon Drizin, Levon Haroutunian, Shivin Thukral

ACL

official

preprint

code

Explaining semantic typology, forms and all

Shane Steinert-Threlkeld

Trends in Cognitive Sciences

official

2021

Quantifiers in Natural Language: Efficient Communication and Degrees of Semantic Universals

Shane Steinert-Threlkeld

Entropy

official (open access) code

A multilabel approach to morphosyntactic probing

Naomi Tachikawa Shapiro, Amandalynne Paullada, Shane Steinert-Threlkeld

Findings of EMNLP

official preprint

code

Monotone Quantifiers Emerge via Iterated Learning

Fausto Carcassi, Shane Steinert-Threlkeld, Jakub Szymanik

Cognitive Science

official (open access) preprint code

Language Models Use Monotonicity to Assess NPI Licensing

Jaap Jumelet, Milica Denic, Jakub Szymanik, Dieuwke Hupkes, Shane Steinert-Threlkeld

Findings of ACL

official preprint code

Quantifiers satisfying semantic universals are simpler

Iris van de Pol, Paul Lodder, Leendert van Maanen, Shane Steinert-Threlkeld, Jakub Szymanik

CogSci 2021

official preprint

code

How social networks affect the repression-dissent puzzle

Shane Steinert-Threlkeld, Zachary Steinert-Threlkeld

PLoS One

official (open access) code

Referential and General Calls in Primate Semantics

Shane Steinert-Threlkeld, Philippe Schlenker, Emmanuel Chemla

Linguistics and Philosophy

official preprint

code

2020

Linguistically-Informed Transformations (LIT): A Method for Automatically Generating Contrast Sets

Chuanrong Li, Lin Shengshuo, Zeyu Liu, Xinyi Wu, Xuhui Zhou, and Shane Steinert-Threlkeld

BlackboxNLP

official

preprint

code

Probing for Multilingual Numerical Understanding in Transformer-Based Language Models

Devin Johnson, Denise Mak, Drew Barker, and Lexi Loessberg-Zahl

BlackboxNLP

official

preprint

code

Ease of Learning Explains Semantic Universals

Shane Steinert-Threlkeld and Jakub Szymanik

Cognition, vol 195.

official preprint code

On the Spontaneous Emergence of Discrete and Compositional Signals

Nur Lan, Emmanuel Chemla, Shane Steinert-Threlkeld

Proceedings of the Association for Computational Linguistics (ACL)

official preprint code

Complexity/informativeness trade-off in the domain of indefinite pronouns

Milica Denic, Shane Steinert-Threlkeld, Jakub Szymanik

Proceedings of Semantics and Linguistic Theory (SALT 30)

official

preprint code

Semantic Expressivism for Epistemic Modals

Peter Hawke and Shane Steinert-Threlkeld (alphabetical order)

Linguistics and Philosophy, forthcoming.

official (open access)

preprint

Most, but not more than half is proportion-dependent and sensitive to individual differences

Sonia Ramotowska, Shane Steinert-Threlkeld, Leendert van Maanen, Jakub Szymanik

Proceedings of Sinn und Bedeutung (SuB 24)

official

preprint

Towards the Emergence of Non-trivial Compositionality

Shane Steinert-Threlkeld

Philosophy of Science, forthcoming.

official

preprint

code

An Explanation of the Veridical Uniformity Universal

Shane Steinert-Threlkeld

Journal of Semantics, vol 37 no 1, pp. 129-144.

official (open access) preprint code

2019

Quantifiers in natural language optimize the simplicity/informativeness trade-off

Shane Steinert-Threlkeld, Proceedings of the 22nd Amsterdam Colloquium, eds. Julian J. Schlöder, Dean McHugh & Floris Roelofsen, pp. 513-522.

official preprint code

poster

Learnability and Semantic Universals

Shane Steinert-Threlkeld and Jakub Szymanik, Semantics & Pragmatics, vol 12 issue 4.

early access

code

The emergence of monotone quantifiers via iterated learning

Fausto Carcassi, Shane Steinert-Threlkeld (co-first), and Jakub Szymanik, Proceedings of the 41st Annual Meeting of the Cognitive Science Society (CogSci 2019).

preprint code

Complexity and learnability in the explanation of semantic universals

Iris van de Pol, Shane Steinert-Threlkeld, and Jakub Szymanik, Proceedings of the 41st Annual Meeting of the Cognitive Science Society (CogSci 2019).

preprint code

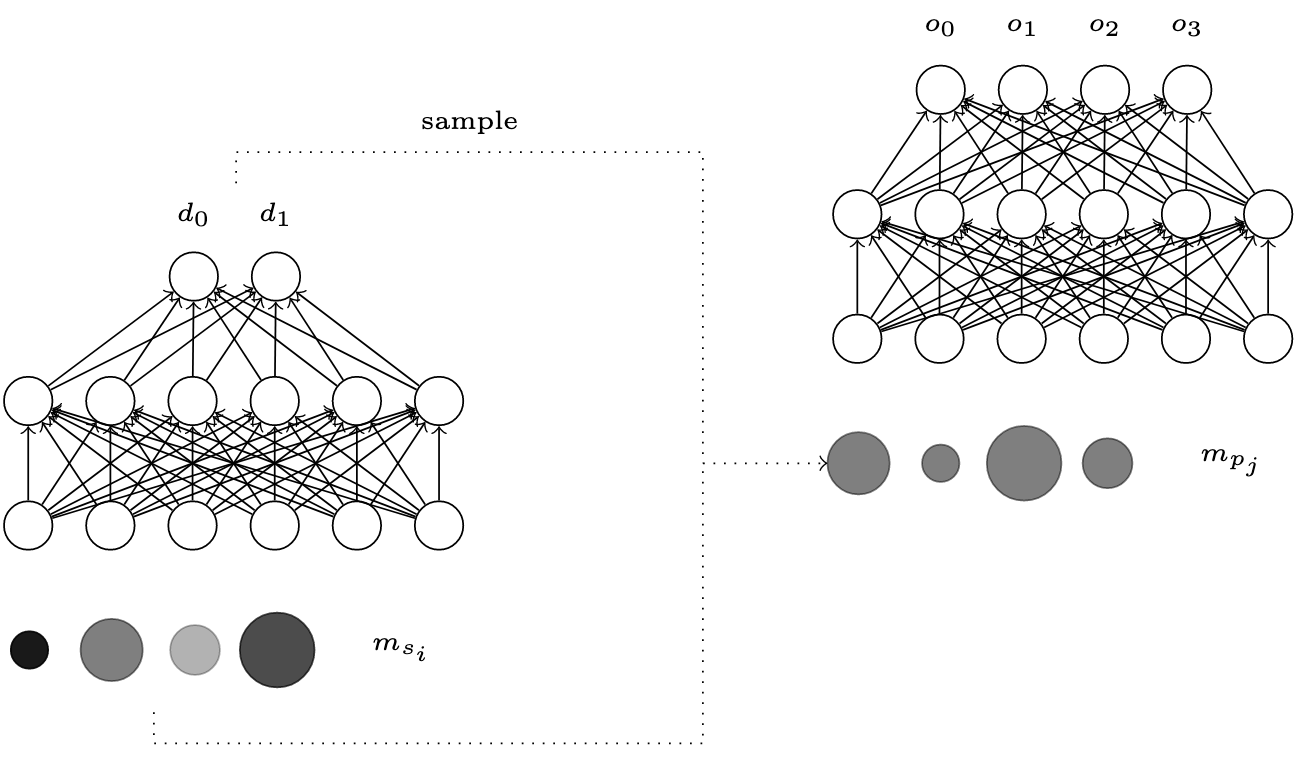

Neural Models of the Psychosemantics of "Most"

Lewis O'Sullivan and Shane Steinert-Threlkeld, Proceedings of the 9th Workshop on Cognitive Modeling and Computational Linguistics (CMCL 2019).

official poster code

2018

Paying Attention to Function Words

Shane Steinert-Threlkeld, Emergent Communication Workshop @ 32nd Conference on Neural Information Processing Systems (NeurIPS 2018).

paper poster code

Some of Them Can be Guessed! Exploring the Effect of Linguistic Context in Predicting Quantifiers

Sandro Pezzelle, Shane Steinert-Threlkeld, Raffaella Bernardi, Jakub Szymanik, Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL 2018).

official code

Informational Dynamics of Epistemic Possibility Modals

Peter Hawke and Shane Steinert-Threlkeld

Synthese, vol 195 no 10, pp. 4309-4342.

official

2016

Compositional Signaling in a Complex World

Shane Steinert-Threlkeld, Journal of Logic, Language, and Information, vol 25 no 3, pp. 379-397.

official

code

Compositionality and Competition in Monkey Alert Calls

Shane Steinert-Threlkeld

Theoretical Linguistics, vol 42 no 1-2, pp. 159-171.

official local

Currently Dormant

The Weighted Möbius Score: A Unified Framework for Feature Attribution

Yifan Jiang, Shane Steinert-Threlkeld

preprint

Learning Compositional Negation in Populations of Roth-Erev and Neural Agents

Graham Todd, Shane Steinert-Threlkeld, Christopher Potts

preprint

Resources

ULTK: The Unnatural Language Toolkit: this is a Python library (in very active development) to facilitate research using unnatural languages, especially in semantic typology. Current tools focus on efficient communication and grammars for expressions in a language-of-thought.

lm-training: a skeleton for config-driven training of language models on your own data, using HuggingFace. Includes the ability to use HF's Trainer to train recurrent models in addition to transformers.

The Modal Typology Database: a database recording observations about the semantic typology of modals in natural language, focusing on the force-flavor pairs that they can express. This is a growing resource, designed to be easy to contribute to, so please consider contributing!

edugrad: a minimal re-implementation of the PyTorch API for building dynamic computation graphs and computing gradients via backpropagation, designed for pedagogical purposes.
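To make the core idea behind a pedagogical autograd library concrete, here is an independent, scalar-valued sketch: each operation records its inputs and local derivatives while the forward pass runs (a dynamic computation graph), and `backward()` applies the chain rule in reverse topological order. This is not edugrad's code, and the `Value` class and its methods are hypothetical names chosen for illustration, not edugrad's or PyTorch's API; a real library would operate on tensors and support many more operations.

```python
import math

class Value:
    """A scalar node in a dynamically built computation graph (illustrative only)."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # nodes this value was computed from
        self._local_grads = local_grads  # d(self)/d(parent), one per parent

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), (1.0 - t * t,))

    def backward(self):
        # Order nodes so every node appears after all nodes it depends on.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0  # d(self)/d(self)
        # Walk from the output back to the leaves, accumulating via the chain rule.
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad

# Usage: y = tanh(w * x + b), then gradients of y with respect to each leaf.
x, w, b = Value(2.0), Value(-0.5), Value(0.25)
y = (w * x + b).tanh()
y.backward()
print(y.data, x.grad, w.grad, b.grad)
```

Gradients are accumulated with `+=` rather than assigned, so a node used more than once (e.g. `a * a`) correctly receives the sum of the contributions from each use.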